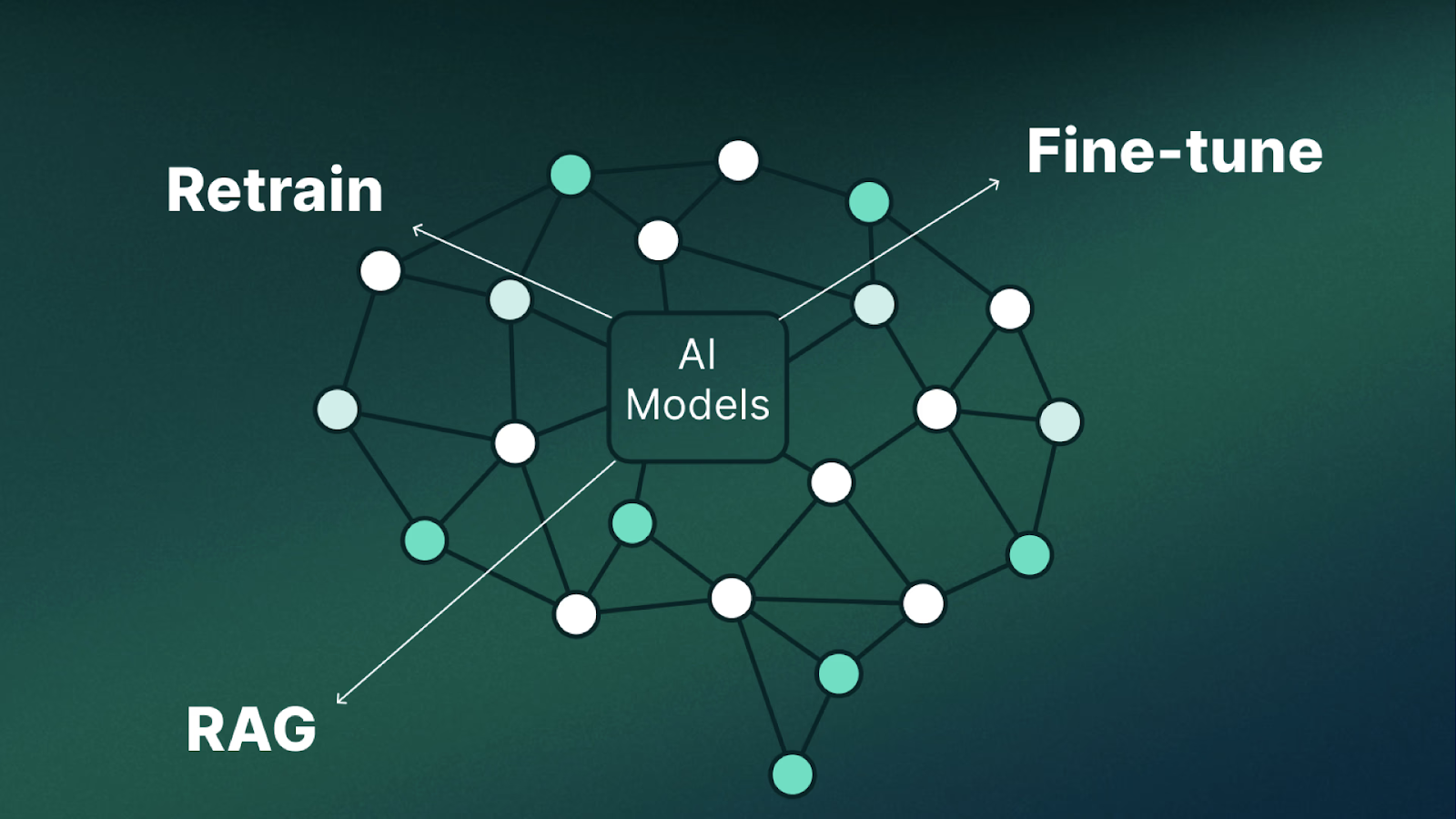

In corporate search, two paths dominate the conversation: Retrieval-Augmented Generation (RAG) and retraining (fine-tuning or full model training). Picking the right one affects accuracy, latency, governance, and total cost. Think of it like comparing lines on a betting slip: you weigh confidence, price, and timing, just as you would when scanning odds from EPL bookmakers for the new season before placing a wager. The smartest choice depends on your data landscape, risk tolerance, and operational constraints.

What RAG Solves in Corporate Search

RAG couples a general-purpose language model with live retrieval from your document stores or APIs. This pattern shines when your knowledge changes often, compliance teams need traceable citations, and you want to keep model behavior stable while swapping in new content. It also helps when multiple systems—wikis, ticketing tools, data catalogs—must feed a single answer.

Key strengths and where it fits

- Fast time-to-value: You can point a retriever at existing sources (SharePoint, Confluence, Google Drive, CRM, code repos) and start answering questions with references.

- Data freshness: Answers reflect the current state of policies, specs, or pricing without re-training a model every time content changes.

- Traceability: Citations let audit or legal teams verify where a statement came from.

- Security patterns that map to reality: Access controls can be enforced at retrieval time; a user only gets passages they’re permitted to view.

- Lower model risk: You’re not changing model weights; you’re changing what the model reads at request time.

- Multi-source fusion: The same question can pull from policy docs, service runbooks, and Jira tickets in one response.
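The retrieval-time access control and citation behavior above can be sketched in a few lines. This is a minimal illustration, not a production retriever: the `Passage` record, the group names, and the term-overlap `score` function (a stand-in for BM25 or vector similarity) are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    doc_id: str
    source_url: str                 # returned to the user as the citation
    text: str
    allowed_groups: frozenset[str]  # groups permitted to read this passage (hypothetical)


def tokens(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def score(query: str, text: str) -> int:
    # Toy relevance: number of shared terms. A real retriever would use BM25 or embeddings.
    return len(tokens(query) & tokens(text))


def retrieve(query: str, user_groups: set[str], index: list[Passage], k: int = 3) -> list[Passage]:
    # Access control at retrieval time: passages the user cannot read are dropped
    # before ranking, so they never reach the prompt.
    visible = [p for p in index if p.allowed_groups & user_groups]
    ranked = sorted(visible, key=lambda p: score(query, p.text), reverse=True)
    return [p for p in ranked[:k] if score(query, p.text) > 0]


def build_prompt(query: str, passages: list[Passage]) -> str:
    # Number each passage so the answer can cite [1], [2], ... back to a source URL.
    sources = "\n".join(
        f"[{i}] ({p.source_url}) {p.text}" for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer only from the sources below and cite them by number. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )
```

Filtering before ranking, rather than after generation, is the design choice that matters here: a passage the user cannot read never enters the prompt, so it cannot leak into the answer.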

Takeaway: Use RAG when your knowledge base updates frequently, you require source citations, and access control must follow user permissions without rebuilding a model.

When Retraining a Model Makes Sense

Retraining—or fine-tuning—means updating a model’s weights with your domain examples. This path is valuable when your organization uses heavy jargon, shorthand, or structured patterns that a generic model keeps missing, even with good retrieval. It’s also helpful for tasks that are style- or workflow-specific (e.g., drafting responses in a tightly controlled tone, or labeling support tickets with your internal taxonomy).
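For the ticket-labeling case, training data is usually a file of input/output pairs. The sketch below assumes a chat-style JSONL layout (a `messages` list with `system`, `user`, and `assistant` roles), which several hosted fine-tuning services accept; check your provider's exact schema. The queue names in `TAXONOMY` and the system prompt are invented for illustration.

```python
import json

# Hypothetical internal taxonomy; substitute your own queue names.
TAXONOMY = {"billing-ops", "identity-access", "platform-sre"}

SYSTEM_PROMPT = (
    "Route the support ticket to exactly one queue from the internal taxonomy. "
    "Reply with the queue name only."
)


def to_record(ticket_text: str, label: str) -> dict:
    # Reject labels outside the taxonomy so typos never become training targets.
    if label not in TAXONOMY:
        raise ValueError(f"unknown label {label!r}")
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": ticket_text},
            {"role": "assistant", "content": label},
        ]
    }


def to_jsonl(examples: list[tuple[str, str]]) -> str:
    # One JSON object per line, the usual layout for fine-tuning uploads.
    return "".join(json.dumps(to_record(text, label)) + "\n" for text, label in examples)
```

Validating labels at export time is cheap insurance: a single misspelled queue name repeated across hundreds of examples teaches the model a category that does not exist.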

Side-by-side comparison to help you choose

| Dimension | RAG | Retraining (fine-tune/full) |
|---|---|---|
| Time to first results | Days to a few weeks once content is indexed | Weeks to months (data prep, training, evaluation) |
| Data freshness | Stays current through re-indexing | Requires new training cycles |
| Citations | Native (returns passages/links) | Not inherent; must add retrieval or logging |
| Handling internal jargon | Good if examples exist in sources; may still miss nuance | Strong once trained on curated examples |
| Access control | Enforced at query time (document-level or row-level) | Must be layered on top; weights don’t “hide” secrets |
| Latency | Extra retrieval hop; often acceptable with caching | Can be fast once deployed, but no live citations |
| Cost profile | Indexing and vector DB infrastructure; model spend is inference only (no training runs) | Data engineering + MLOps; higher up-front spend |
| Failure modes | Retrieval misses, chunking errors, weak reranking | Drift, overfitting, costly retrains |
| Vendor lock-in | Lower if using open retrievers/stores | Higher if tied to a specific training stack |
| Governance | Strong: sources are shown, easy to audit | Heavier process to prove why the model said something |

Takeaway: Retraining pays off when your workforce speaks in domain shorthand, classification needs are strict, or you must produce outputs in a tight house style—even when the source text is messy. Expect more up-front work and stronger ongoing MLOps discipline.

How to Decide: A Practical Framework

You don’t always need to pick only one. Many enterprises run RAG first, then fine-tune where gaps persist. The questions below help map your next move.

Start with the business problem, not the algorithm. Then look at data freshness requirements, access control, and the level of traceability your auditors expect. Finally, pressure-test cost, latency, and who will own the system long-term.

Decision checklist

- How fast does knowledge change? If policies, SKUs, or org charts move weekly, RAG leads.

- Do you need citations for audit or trust? If yes, RAG is the default; retraining can layer on later.

- Does your workforce use heavy jargon or templated outputs? If yes, plan for a fine-tune after a RAG pilot.

- What are your access-control constraints? If permissions vary by user or team, retrieval-time filtering is simpler.

- Budget and ownership: Do you have MLOps capacity for data curation, evaluation, and re-training cycles?

- Latency targets: Customer-facing chat may need aggressive response times; use caching, shorter prompts (fewer retrieved passages), or a compact fine-tuned model for narrow tasks.

- Risk posture: If an incorrect answer creates legal exposure, lean on approaches that show sources and reduce hallucination.
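One way to make the checklist repeatable across teams is to encode it as a small function. This is a hypothetical heuristic covering a subset of the questions (latency targets are left out), with field names chosen for illustration; it mirrors the advice above rather than replacing judgment.

```python
from dataclasses import dataclass


@dataclass
class Assessment:
    # Answers to the checklist questions (True = "yes"); field names are illustrative.
    knowledge_changes_weekly: bool
    needs_citations: bool
    heavy_jargon_or_templates: bool
    per_user_permissions: bool
    has_mlops_capacity: bool
    legal_exposure: bool


def rollout_plan(a: Assessment) -> list[str]:
    # A RAG pilot comes first in every case: it is the lean, observable starting point.
    steps = ["Run a RAG pilot and log where answers fail"]
    if a.needs_citations or a.legal_exposure:
        steps.append("Require citations and refuse when no source supports the answer")
    if a.per_user_permissions:
        steps.append("Filter passages by the user's permissions at retrieval time")
    if a.knowledge_changes_weekly:
        steps.append("Schedule frequent re-indexing rather than retraining")
    if a.heavy_jargon_or_templates:
        # Fine-tuning only makes sense if someone can curate data and run evaluations.
        if a.has_mlops_capacity:
            steps.append("Fine-tune on the error clusters the pilot cannot fix")
        else:
            steps.append("Build data-curation and evaluation capacity before any fine-tune")
    return steps
```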

Takeaway: Start lean and observable. Use RAG to learn where the model fails; fine-tune only where errors repeat and carry real business impact.

Making RAG Work in the Real World (without a new platform rewrite)

RAG succeeds or fails on retrieval quality. Garbage in, garbage out. Focus on content health: de-duplicate, remove obsolete docs, and standardize titles so retrievers find the right passages. Chunking strategies matter—policy PDFs might need paragraph-level chunks, while API docs benefit from heading-aware splits. Rerankers can lift relevance when your corpus is large and uneven.
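Here is a minimal sketch of the two chunking strategies and a de-duplication pass, assuming API docs arrive as Markdown and policy text as plain text with blank lines between paragraphs. Function names are illustrative; real PDFs would first need text extraction, which is out of scope here.

```python
import hashlib
import re


def split_by_headings(markdown: str) -> list[dict]:
    # Heading-aware split for API docs: each chunk carries the heading it sits under.
    chunks, heading, body = [], "", []

    def flush():
        text = "\n".join(body).strip()
        if text:
            chunks.append({"heading": heading, "text": text})

    for line in markdown.splitlines():
        if re.match(r"#{1,6} ", line):
            flush()
            heading, body = line.lstrip("#").strip(), []
        else:
            body.append(line)
    flush()
    return chunks


def split_by_paragraphs(text: str) -> list[str]:
    # Paragraph-level chunks for policy text: split on blank lines.
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]


def dedupe(chunks: list[str]) -> list[str]:
    # Drop copies that differ only in case or whitespace (e.g. the same policy saved twice).
    seen, unique = set(), []
    for chunk in chunks:
        key = hashlib.sha256(" ".join(chunk.lower().split()).encode()).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(chunk)
    return unique
```

Keeping the heading next to each chunk lets the retriever match a query like "token refresh" even when the body text never repeats the heading's words.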

Observability is non-negotiable. Log top queries, track which sources were cited, and review “no result” paths. Add feedback buttons so users can mark good and bad answers. A weekly triage turns that feedback into content fixes (archiving stale docs) or retrieval tweaks (new synonyms, better chunk size). With that loop in place, the system keeps improving without touching model weights.
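The logging and weekly triage loop might look like this, assuming one JSON log line per answered query. The field names (`cited_sources`, `no_result`, `feedback`) are hypothetical; any log store that can return these lines would do.

```python
import json
from collections import Counter
from datetime import datetime, timezone
from typing import Optional


def log_event(query: str, cited_sources: list[str], feedback: Optional[str] = None) -> str:
    # One JSON line per answered query; "feedback" holds "up", "down", or None.
    return json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "query": query,
        "cited_sources": cited_sources,
        "no_result": not cited_sources,
        "feedback": feedback,
    })


def weekly_triage(log_lines: list[str], top_n: int = 5) -> dict:
    events = [json.loads(line) for line in log_lines]
    bad = [e for e in events if e["feedback"] == "down"]
    return {
        "top_queries": Counter(e["query"] for e in events).most_common(top_n),
        # Queries with no cited source: candidates for new content or synonyms.
        "no_result_queries": Counter(e["query"] for e in events if e["no_result"]).most_common(top_n),
        # Sources behind thumbs-down answers: candidates for archiving or rewriting.
        "sources_in_bad_answers": Counter(s for e in bad for s in e["cited_sources"]).most_common(top_n),
    }
```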

On performance and spend: cache frequent queries, pre-compute embeddings on a schedule, and set sensible timeouts for slow connectors. If latency spikes, shrink the candidate set with a strict lexical pre-filter before vector search, then rerank a small pool.
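The pre-filter, vector search, rerank, and cache sequence can be sketched end to end. Everything here is a stand-in under stated assumptions: `embed` is a hashed bag of words instead of a real embedding model, `rerank` is a simple term-coverage score where a cross-encoder would normally go, and the three-document corpus is made up. Caching uses Python's standard `functools.lru_cache`.

```python
import math
import re
import zlib
from functools import lru_cache


def tokens(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def embed(text: str, dim: int = 64) -> list[float]:
    # Stand-in embedding (hashed bag of words, L2-normalized); replace with a real model.
    vec = [0.0] * dim
    for tok in tokens(text):
        vec[zlib.crc32(tok.encode()) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


# Hypothetical corpus. Vectors are computed once here; a scheduled job would refresh them.
DOCS = {
    "vpn-guide": "How to install and configure the corporate VPN client",
    "vpn-faq": "VPN connection drops and common error codes",
    "expense-policy": "Submitting travel expenses and receipt rules",
}
DOC_VECS = {doc_id: embed(text) for doc_id, text in DOCS.items()}


def lexical_prefilter(query: str, limit: int = 50) -> list[str]:
    # Cheap first stage: keep only documents that share at least one term with the query.
    q = tokens(query)
    scored = sorted(((len(q & tokens(t)), d) for d, t in DOCS.items()), reverse=True)
    return [d for overlap, d in scored if overlap > 0][:limit]


def rerank(query: str, doc_ids: list[str]) -> list[str]:
    # Stand-in reranker (share of each doc's terms that match the query);
    # a cross-encoder model would normally sit here.
    q = tokens(query)
    return sorted(doc_ids, key=lambda d: len(q & tokens(DOCS[d])) / len(tokens(DOCS[d])), reverse=True)


@lru_cache(maxsize=1024)
def search(query: str, pool_size: int = 5, k: int = 2) -> tuple[str, ...]:
    candidates = lexical_prefilter(query)
    qv = embed(query)
    by_vector = sorted(
        candidates,
        key=lambda d: sum(a * b for a, b in zip(qv, DOC_VECS[d])),  # cosine: unit-length vectors
        reverse=True,
    )
    # Rerank only a small pool so the expensive stage has bounded cost.
    return tuple(rerank(query, by_vector[:pool_size])[:k])
```

Because `search` is memoized, stale answers survive a re-index unless the cache is cleared; with `lru_cache` that means calling `search.cache_clear()` after each refresh.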

Where Retraining Delivers Outsized Value

Some tasks don’t rely on a single source of truth. Think classification (“route this ticket to the right squad”), short-form drafting in a house tone, or extracting structured fields from messy contracts. Here, a fine-tune trained on a few thousand high-quality examples can lift precision and consistency. Pair it with a smaller retrieval step for citations when needed. Keep a hold-out test set that mirrors production, not a synthetic sample that flatters the model.
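A hold-out set that mirrors production can be as simple as setting aside the most recent real tickets instead of a random or synthetic sample. The sketch below assumes each ticket is a dict with a sortable `created_at` timestamp (an illustrative field name) and scores the model with per-queue precision, which fits the ticket-routing example.

```python
from collections import defaultdict


def time_based_holdout(tickets: list[dict], holdout_frac: float = 0.2) -> tuple[list[dict], list[dict]]:
    # Hold out the most recent real tickets so the test set looks like current traffic.
    ordered = sorted(tickets, key=lambda t: t["created_at"])
    cut = round(len(ordered) * (1 - holdout_frac))
    return ordered[:cut], ordered[cut:]


def precision_by_label(gold: list[str], predicted: list[str]) -> dict[str, float]:
    # Of the tickets the model routed to each queue, what share belonged there?
    hits, totals = defaultdict(int), defaultdict(int)
    for g, p in zip(gold, predicted):
        totals[p] += 1
        hits[p] += int(g == p)
    return {label: hits[label] / totals[label] for label in totals}
```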

Plan the lifecycle early: define when to refresh the dataset, who approves labels, and how you measure drift. If the model’s outputs start to wander—longer answers, odd vocabulary, changing rates of “I don’t know”—that’s your cue to re-label tricky cases and run a small update, not a giant rebuild.
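The drift signals named above (answer length, unfamiliar vocabulary, and the rate of "I don't know") can be compared between a baseline window and the current one. The refusal phrases and tolerance values below are hypothetical starting points, not recommended settings.

```python
from statistics import mean

# Hypothetical refusal markers; adapt to how your model phrases "I don't know".
IDK_PHRASES = ("i don't know", "i do not know", "not sure")


def summarize(answers: list[str], vocab: set[str]) -> dict[str, float]:
    words = [w for a in answers for w in a.lower().split()]
    return {
        "avg_len": mean(len(a.split()) for a in answers),
        "idk_rate": sum(any(p in a.lower() for p in IDK_PHRASES) for a in answers) / len(answers),
        "oov_rate": sum(w not in vocab for w in words) / max(1, len(words)),
    }


def drift_flags(baseline: list[str], current: list[str],
                len_tol: float = 0.3, idk_tol: float = 0.05, oov_tol: float = 0.10) -> list[str]:
    # Vocabulary seen in the baseline window; new words raise the out-of-vocabulary rate.
    vocab = {w for a in baseline for w in a.lower().split()}
    b, c = summarize(baseline, vocab), summarize(current, vocab)
    flags = []
    if abs(c["avg_len"] - b["avg_len"]) > len_tol * b["avg_len"]:
        flags.append("answer length shifted")
    if abs(c["idk_rate"] - b["idk_rate"]) > idk_tol:
        flags.append("'I don't know' rate changed")
    if c["oov_rate"] - b["oov_rate"] > oov_tol:
        flags.append("unfamiliar vocabulary rising")
    return flags
```

Any flag is a cue to pull the affected examples for re-labeling, in line with the small, targeted update described above.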

A Simple Rollout Play That Works

Phase 1: run a RAG pilot for a constrained domain (e.g., HR policies or support runbooks). Set guardrails: always cite, refuse when confidence is low, and route tough questions to a human queue. Track metrics that matter: first-contact resolution, average handle time, and escalation rate.
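The Phase 1 guardrails and pilot metrics can be sketched as follows. The `confidence` field is an assumption (for example, a normalized top retrieval score), as are the 0.6 threshold and the conversation record fields; adapt them to whatever your pilot actually records.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    answer: str
    citations: list[str]
    confidence: float  # assumed 0..1 score, e.g. a normalized top retrieval score


def apply_guardrails(draft: Draft, min_confidence: float = 0.6) -> tuple[str, str]:
    # Answer only when the draft is cited and confident; otherwise refuse and escalate.
    if not draft.citations:
        return "escalate", "I couldn't find a source for this; routing to a specialist."
    if draft.confidence < min_confidence:
        return "escalate", "I'm not confident enough to answer; routing to a specialist."
    return "answer", f"{draft.answer} Sources: {', '.join(draft.citations)}"


def pilot_metrics(conversations: list[dict]) -> dict[str, float]:
    # Each record: {"resolved_first_contact": bool, "handle_seconds": float, "escalated": bool}
    n = len(conversations)
    return {
        "first_contact_resolution": sum(c["resolved_first_contact"] for c in conversations) / n,
        "avg_handle_time_s": sum(c["handle_seconds"] for c in conversations) / n,
        "escalation_rate": sum(c["escalated"] for c in conversations) / n,
    }
```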

Phase 2: once retrieval is stable, identify two or three error clusters the pilot cannot shake—jargon requests, repetitive classification tasks, or style-heavy replies. Build a compact fine-tune on those cases. Keep it surgical. The point is to fix a specific gap, not to chase leaderboard scores.
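To find the error clusters the pilot cannot shake, one option is to keep only failure categories that recur every week and rank them by volume. This assumes triage already tags each failure with a category; the tags in the example are hypothetical.

```python
from collections import Counter


def persistent_clusters(weekly_failures: list[list[str]], top_n: int = 3) -> list[str]:
    # weekly_failures: one list of error-category tags per week, produced by triage.
    if not weekly_failures:
        return []
    # Categories present in every week are the ones retrieval fixes have not removed.
    every_week = set.intersection(*(set(week) for week in weekly_failures))
    totals = Counter(tag for week in weekly_failures for tag in week if tag in every_week)
    return [tag for tag, _ in totals.most_common(top_n)]
```

A category that disappears after a content fix is a retrieval problem; one that survives week after week is a candidate for the compact fine-tune.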

Phase 3: productize with clear ownership. IT or platform teams manage connectors and indexing; knowledge owners keep content clean; a small AI ops pod monitors logs, feedback, and safety rules.

Final Thoughts

Both paths can raise answer quality for employees and customers, but they shine under different constraints. RAG is the fastest way to ground a model’s answers in corporate knowledge, with citations and permission checks. Retraining is the tool for language and structure that are unique to your business. Many teams do both: retrieval for truth, fine-tuning for voice and workflow. Start where risk is lowest, prove value quickly, then expand with careful scope. Like picking the right market on a Saturday fixture list, the winning move is to balance odds, cost, and timing, and to place your bet only after you’ve checked the data twice.

Wayne is a unique blend of gamer and coder, a character as colorful and complex as the worlds he explores and the programs he crafts. With a sharp wit and a knack for unraveling the most tangled lines of code, he navigates the realms of pixels and Python with equal enthusiasm. His stories aren’t just about victories and bugs; they’re about the journey, the unexpected laughs, and the shared triumphs. Wayne’s approach to gaming and programming isn’t just a hobby, it’s a way of life that encourages curiosity, persistence, and, above all, finding joy in every keystroke and every quest.